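# Imports this section relies on, inferred from the names used below
# (harmless if they are already imported earlier in the file).
import random

import spacy
from sklearn.metrics import accuracy_score, precision_score, recall_score
from sklearn.model_selection import KFold
from spacy.training import Example
from spacy.util import minibatch
from thinc.api import Adam

k = 5  # NOTE: change the number of folds here

k_fold = KFold(k)
kfold_iteration = enumerate(k_fold.split(x_train, y_train))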

# One list of per-fold scores for each model and each metric.
model_names = [
    "Rand Uniform",
    "Rand Binom p={}".format(prior_p),
    "Always False",
    "Big If",
    "Spacy",
]
metrics = {name: {"acc": [], "pre": [], "rec": []} for name in model_names}

# The loop variable is named `fold` so that it does not overwrite `k` (the number of folds).
for fold, (train_idx, val_idx) in kfold_iteration:
    print("K-fold cross-validation iteration {}".format(fold))
    x_tr, x_val = x_train[train_idx], x_train[val_idx]
    y_tr, y_val = y_train[train_idx], y_train[val_idx]

    # Baseline classifiers: evaluated directly on the validation split.
    for model_name, model in [
        ("Rand Uniform", rand_binom_uniform_classifier),
        ("Rand Binom p={}".format(prior_p), rand_binom_biased_classifier),
        ("Always False", always_false_classifier),
        ("Big If", big_if_classifier),
    ]:
        val_preds = predict(model, x_val)
        metrics[model_name]["acc"].append(accuracy_score(y_val, val_preds))
        # zero_division=0 keeps the score at 0 without a warning when a model never predicts the positive class.
        metrics[model_name]["pre"].append(precision_score(y_val, val_preds, zero_division=0))
        metrics[model_name]["rec"].append(recall_score(y_val, val_preds, zero_division=0))

    # `nlp` is loaded fresh inside this loop, so only the model name is iterated.
    for model_name in ["Spacy"]:
        # Reformat the data for the spaCy text categorizer.
        # Only the first 200 training examples of the fold are used.
        train_data = []
        for i in range(min(200, len(x_tr))):
            label = y_tr[i]
            text = x_tr[i]  # named `text` because `input` shadows the builtin
            # if i < 5:
            #     print("Input message: ", text)
            #     print("Label: ", label)
            #     print("----")
            if label == 1:
                train_data.append((text, {"cats": {"spam": 1, "ham": 0}}))
            else:
                train_data.append((text, {"cats": {"spam": 0, "ham": 1}}))

        # Train the model
        losses = {}
        dropout = 0.1  # NOTE: to prevent overfitting
        learning_rate = 0.002  # other values to try: 0.001 or 2e-5

        # Load a transformer-based model

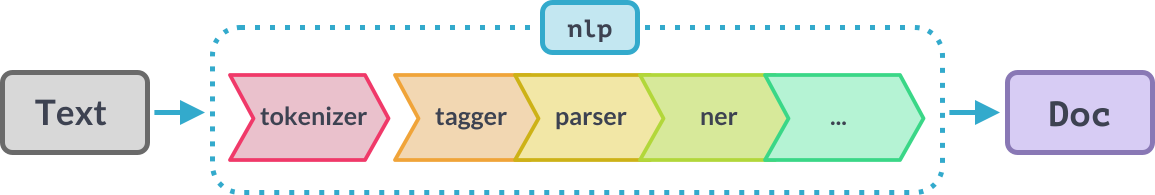

        nlp = spacy.load("en_core_web_trf")

        # Add a text categorizer to the pipeline if not already present
        if "textcat" not in nlp.pipe_names:
            textcat = nlp.add_pipe("textcat", last=True)
        else:
            textcat = nlp.get_pipe("textcat")

        # Add labels to the text categorizer
        textcat.add_label("spam")
        textcat.add_label("ham")

        # Optionally remove components that are not used here
        print("The nlp pipe components before removal are: {}.\n".format(nlp.pipe_names))
        for pipe_name in ("lemmatizer", "tagger", "parser", "ner"):
            # Guard against components missing from the loaded pipeline.
            if pipe_name in nlp.pipe_names:
                nlp.remove_pipe(pipe_name)

        # Initialize the pipeline for training
        nlp.initialize()

        optimizer = Adam(
            learn_rate=learning_rate,
            beta1=0.9,
            beta2=0.999,
            eps=1e-08,
            L2=1e-6,
            # grad_clip=1.0,
            use_averages=True,
            L2_is_weight_decay=True,
        )

        for epoch in range(4):  # NOTE: change the number of iterations as needed
            losses = {}  # reset so the printed losses cover this iteration only
            random.shuffle(train_data)
            batches = minibatch(train_data, size=8)
            for batch in batches:
                # Each batch item is a (text, annotations) pair.
                examples = [
                    Example.from_dict(nlp.make_doc(text), ann)
                    for text, ann in batch
                ]
                nlp.update(examples, sgd=optimizer, drop=dropout, losses=losses)
            print(f"Losses at iteration {epoch}: {losses}")

        # Evaluate the trained pipeline on the validation split of this fold.
        val_preds = predict_spacy(nlp, x_val)
        metrics[model_name]["acc"].append(accuracy_score(y_val, val_preds))
        metrics[model_name]["pre"].append(precision_score(y_val, val_preds, zero_division=0))
        metrics[model_name]["rec"].append(recall_score(y_val, val_preds, zero_division=0))
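
Once the loop finishes, `metrics` holds one list of per-fold scores for each model and each metric. One possible way to compare models is to reduce each list to a mean and a standard deviation. The sketch below is an illustration, not part of the original code: the helper name `summarize_metrics` is hypothetical, and the scores in `example_metrics` are made-up stand-ins for real results.

```python
from statistics import mean, stdev


def summarize_metrics(metrics):
    """Return {model: {metric: (mean, std)}} from lists of per-fold scores.

    Hypothetical helper; expects the same nested layout as `metrics` above.
    """
    summary = {}
    for model_name, scores in metrics.items():
        summary[model_name] = {}
        for metric_name, values in scores.items():
            if not values:
                continue  # this model was never evaluated
            # stdev needs at least two values; report 0.0 for a single fold.
            spread = stdev(values) if len(values) > 1 else 0.0
            summary[model_name][metric_name] = (mean(values), spread)
    return summary


# Made-up scores for three folds, used only to show the call.
example_metrics = {
    "Always False": {
        "acc": [0.5, 0.75, 1.0],
        "pre": [0.0, 0.0, 0.0],
        "rec": [0.0, 0.0, 0.0],
    },
}

summary = summarize_metrics(example_metrics)
for model_name, stats in summary.items():
    for metric_name, (avg, spread) in stats.items():
        print(f"{model_name} {metric_name}: {avg:.3f} +/- {spread:.3f}")
```

Reporting the spread alongside the mean shows whether a gap between two models is larger than the variation from fold to fold.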