The following classifier is trained with spaCy, something you will learn later in the course. For now, focus on the evaluation and on some cool aspects of this package.

You may have noticed that our tokenization is too simple. For example, "money" could appear right before a comma, and apostrophes can also produce distinct tokens that actually refer to the same word. spaCy provides language-specific tokenizers that take care of such edge cases.
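To make the problem concrete, here is a minimal sketch of how a naive tokenizer goes wrong. It assumes a plain lowercase-and-split-on-whitespace approach, which may differ from the exact tokenization used earlier; the example sentence and variable names are made up for illustration.

```python
# Assumption: "simple tokenization" means lowercasing and splitting on whitespace.
text = "I need money, but money isn't everything."

naive_tokens = text.lower().split()
print(naive_tokens)

# Punctuation stays glued to the word, so the same word ends up
# as two different vocabulary entries: "money," and "money".
vocabulary = set(naive_tokens)
print("money," in vocabulary, "money" in vocabulary)
```

With this approach the classifier would treat "money," and "money" as unrelated features, which is exactly the kind of edge case a proper tokenizer handles.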

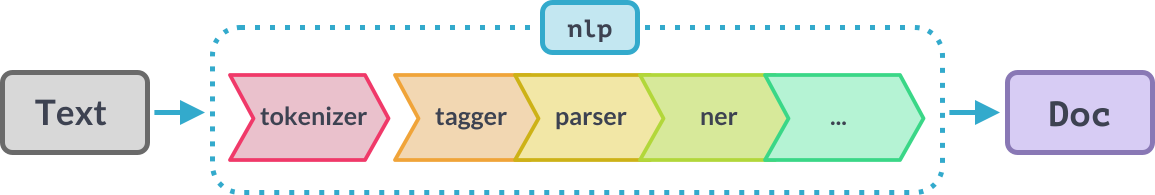

And that's not all! The framework is organized as a pipeline that takes a plain text string and turns it into a featurized Doc. "Doc" here doesn't mean multiple sentences. You can think of a Doc as the plain text augmented with meaningful linguistic features, which can be better signals for classification tasks. Here is a possible set of components that make up the spaCy nlp pipeline:

Notice that the tokenizer is separate. This is because, for a given language, spaCy has only one tokenizer. As the spaCy website puts it:

[...] while all other pipeline components take a Doc and return it, the tokenizer takes a string of text and turns it into a Doc

So let's create an English pipeline with only the tokenizer by disabling the rest of the components.
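As a rough sketch of what a tokenizer-only pipeline looks like, the snippet below uses `spacy.blank("en")`. This is a swapped-in shortcut rather than the approach described above: instead of loading a trained pipeline and disabling its components, it builds an English pipeline that has the tokenizer and no other components, so no model download is needed. The example sentence is an assumption for illustration.

```python
import spacy

# Assumption: a blank English pipeline stands in for "a trained pipeline
# with every component disabled". It contains only the English tokenizer.
nlp = spacy.blank("en")

# The tokenizer is not listed among the pipeline components.
print(nlp.pipe_names)  # []

# Calling the pipeline on a string returns a Doc made of Token objects.
doc = nlp("I need money, but money isn't everything.")
tokens = [token.text for token in doc]
print(tokens)
```

Here the comma is split off into its own token, so both occurrences of "money" map to the same token text.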